Ensure smoother testing with Test Data Management

In the previous post, we saw the challenges organizations face in getting adequate test data for their test environments. The inability to pull in enough test data often hurts product quality, costs, and time to market. Test data management (TDM) services can overcome these challenges and ensure a smooth testing process with better test coverage across multiple environments and scenarios. TDM also ensures that the data is secure and masked, so that sensitive information does not leak. How does it do that? Let’s take a look.

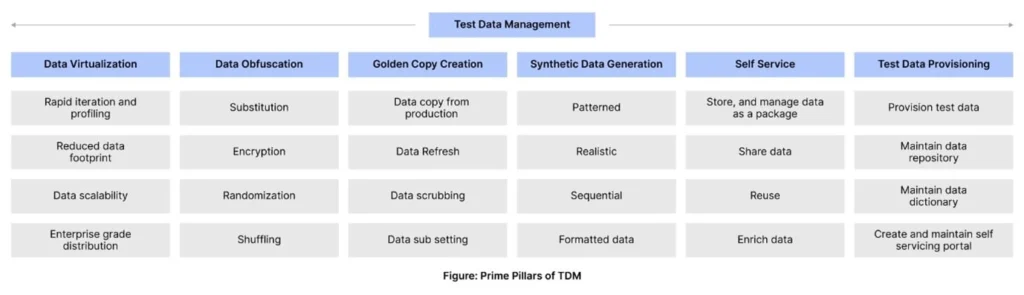

The prime pillars of Test Data Management

Test Data Management services must deliver six key capabilities to meet the data needs of test environments: Data Virtualization, Data Obfuscation, Golden Copy Creation, Synthetic Data Generation, Self Service, and Test Data Provisioning (see figure).

Figure: Prime pillars of TDM

- Data Virtualization – Data virtualization provides the ability to securely manage and distribute policy-governed virtual copies of production-quality datasets. Data virtualization is the critical lever used by forward-thinking enterprises to provision production-quality data to dev and test environments on demand or via APIs. (Source: Delphix)

- Data Obfuscation – To prevent data leakage and ensure the security of sensitive data, TDM disguises the data it makes available to testers. The tool creates a replica of the actual data in which sensitive values are masked, encrypted, or substituted.

- Golden Copy Creation – To prevent data corruption, developers and testers cannot directly access production data. A golden copy is a replica of the production data set that mirrors the production environment, giving testers access to relevant data without creating any issues in production. The golden copy is also refreshed every time there is a data update or batch run in production, so testing teams always have access to the most recent data set. However, the production environment often hosts a massive set of data with billions of data points. This volume of data isn’t necessary for testing, so the golden copy is scrubbed and subsetted: unwanted or unnecessary data sets are removed, and separate copies are made of the most important ones.

- Synthetic Data Generation – To reduce dependence on production and development servers, TDM can also synthesize data sets that resemble production data. Once the system understands the pattern or sequence of transactions, it can create a very similar sequence. To get data for a scenario, testers feed the scenario into the TDM tool, which generates data based on the identified sequences and patterns.

- Self Service – Instead of relying on IT ticketing systems, an advanced TDM approach puts sufficient levels of automation in place that enable end users to provision test data via self-service. Self-service capabilities should extend not just to data delivery, but also to control over test data versioning. For example, testers should be able to bookmark and reset, archive, or share copies of test data without involving operations teams. (Source: Delphix)

- Test Data Provisioning – Test data provisioning makes the right data available whenever and wherever it is needed. This requires creating and maintaining a data repository for multiple scenarios and enabling self-service capabilities so that testing teams get instant access to data.
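To make the obfuscation and golden copy ideas above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular TDM tool works: the field names, the `MASKING_KEY`, the sample rows, and the "keep every n-th row" subsetting rule are all hypothetical. Sensitive values are replaced with keyed hashes, so the same input always maps to the same token and relationships between records are preserved.

```python
import hashlib
import hmac

# Hypothetical secret for illustration only; a real setup would load it
# from a secrets manager instead of hard-coding it.
MASKING_KEY = b"example-key-not-for-production"

# Hypothetical set of fields treated as sensitive.
SENSITIVE_FIELDS = ("name", "email")


def mask_value(value: str) -> str:
    """Replace a sensitive value with a stable, non-reversible token."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256)
    return "masked_" + digest.hexdigest()[:12]


def mask_record(record: dict) -> dict:
    """Return a new record with the sensitive fields masked."""
    return {
        key: mask_value(str(val)) if key in SENSITIVE_FIELDS else val
        for key, val in record.items()
    }


def build_golden_copy(production_rows: list, keep_every: int = 2) -> list:
    """Subset the rows (every n-th row), then mask what remains."""
    subset = production_rows[::keep_every]
    return [mask_record(row) for row in subset]


# Made-up rows standing in for a production extract.
production_rows = [
    {"id": 1, "name": "Alice Example", "email": "alice@example.com", "balance": 120.5},
    {"id": 2, "name": "Bob Example", "email": "bob@example.com", "balance": 80.0},
    {"id": 3, "name": "Carol Example", "email": "carol@example.com", "balance": 310.2},
    {"id": 4, "name": "Dan Example", "email": "dan@example.com", "balance": 15.75},
]

golden_copy = build_golden_copy(production_rows, keep_every=2)
for row in golden_copy:
    print(row)
```

Real subsetting would normally follow business rules (for example, keeping complete customer histories) rather than simple row sampling, but the shape of the pipeline is the same: select a smaller slice, then mask it before testers see it.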

You need an expert TDM partner

As it stands, the current state of test data management leaves much to be desired. Valuable time is lost as development and testing teams scramble for timely, relevant data. Managing test data professionally ensures that, for any number of releases and at every stage of testing, a scrubbed and encrypted golden copy of the data is available on demand in real time.

While this may sound simple, it’s not easy. To ensure data availability, you need to understand what data is needed in the test environment and at what stage. You also need to understand the scenarios so that specific data can be generated for them. Then, the available TDM tools must be evaluated to determine the best fit for your needs. Once the tool is identified, test data creation starts. The tool is also integrated with DevOps tools so that the data can be pushed into any environment. Once the data is used, it can be reset to its original state, ready to be used again, eliminating the need for multiple data sets.

However, for TDM to run smoothly, this entire approach must be designed up front. Getting an expert TDM partner on board early will help ensure your approach is robust and aligned with the unique TDM needs of your business.
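The reset step mentioned above, where used test data is returned to its original state, can be sketched in a few lines of Python. This is only an in-memory illustration: the `ProvisionedDataSet` class and its `bookmark` and `reset` methods are hypothetical names, and a real TDM tool would typically snapshot databases or virtual copies rather than Python lists.

```python
import copy


class ProvisionedDataSet:
    """In-memory stand-in for a provisioned test data set (hypothetical)."""

    def __init__(self, rows):
        self.rows = rows
        self._bookmarks = {}

    def bookmark(self, name):
        """Save a deep copy of the current state under the given name."""
        self._bookmarks[name] = copy.deepcopy(self.rows)

    def reset(self, name):
        """Restore the state that was saved under the given bookmark."""
        self.rows = copy.deepcopy(self._bookmarks[name])


data = ProvisionedDataSet([
    {"id": 1, "status": "new"},
    {"id": 2, "status": "new"},
])
data.bookmark("baseline")

# A test run mutates and deletes rows...
data.rows[0]["status"] = "processed"
data.rows.pop()

# ...and the tester restores the baseline without asking operations.
data.reset("baseline")
print(data.rows)
```

Because each bookmark stores a deep copy, later changes to the working rows cannot alter the saved baseline, which is what lets one data set be reused across test runs.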